Arrcus Edge Networking Solutions on Intel® NetSec Accelerator Reference Design

September 27, 2023 | Sean Griffin

Introduction

The cost benefits of migrating applications from on-premises data centers to the cloud are substantial and well known. However, the growing footprint of modern enterprise applications has not stopped at the cloud. As providers look to improve the quality of their services, applications have spread to the colocation and network edge where, just as in Cloud Service Provider (CSP) data centers, operational expenditure (OpEx) is extremely sensitive to the efficient use of physical space and power.

Furthermore, this expanded application footprint has led large enterprises, telcos, and service providers to distribute workloads among different geo-locations and across private data centers, edge locations, colocations, and CSPs. These workloads are also deployed to both physical and virtual infrastructure with varying access models including full ownership, rental, and Infrastructure-as-a-Service (IaaS). Enterprises often have limited control of any underlying infrastructure or networking in environments that they do not own. This creates a scenario where distributed workloads are connected over disjointed, incompatible infrastructure stitched together across protocols and managed by a heterogeneous set of provider-specific tools and interfaces.

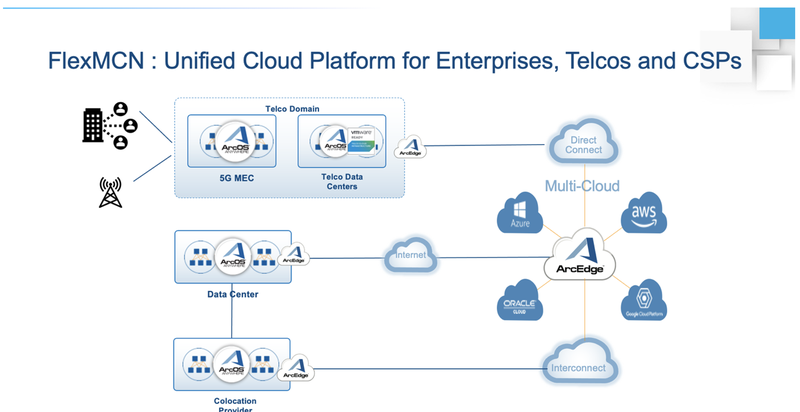

Fig. 1: FlexMCN: Unified Platform for the Distributed Cloud

Arrcus’ ArcEdge virtual router running on Intel NetSec Accelerator Reference Design compatible cards provides homogeneous application connectivity to public clouds and on-premises data centers from the colocation and edge. With this combined Arrcus and Intel solution, telcos and colocation providers can carve out network slices that meet the SLA requirements of high bandwidth and low latency while preserving physical space and improving overall power efficiency.

In this blog post we describe how the Intel® NetSec Accelerator Reference Design and Arrcus’ ArcEdge router combine to reduce costs by simplifying the multi-cloud and edge network connectivity stack, improving the efficient use of space, enabling strong tenant isolation, and allowing for predictable performance, all while maintaining a cost-saving low power consumption profile.

Arrcus Hybrid/Multi-Cloud Networking

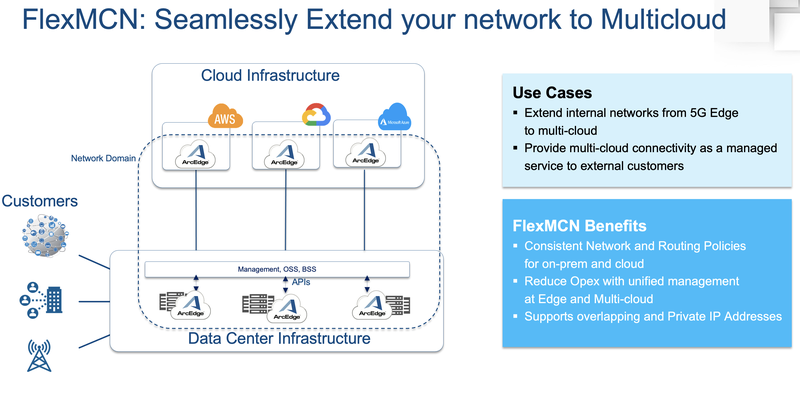

FlexMCN is one of the industry’s most flexible multi-cloud networking solutions, designed for cloud, colocation, and telecommunication providers to seamlessly extend their on-prem networks to multi-cloud environments or to deliver multi-cloud connectivity as a managed service.

The Arrcus FlexMCN platform includes ArcEdge, a secure control and data plane element. ArcEdge secures and routes traffic between CSPs, regions, and sites; consequently, its operational performance directly impacts both the Quality of Service (QoS) and the OpEx of systems that communicate across these boundaries. For the interconnection of large-scale distributed applications, it is not uncommon for operators to deploy thousands of instances of ArcEdge to environments with high application density. These factors suggest that a low-cost, high-performance compute solution that is physically isolated from tenant workload execution may be ideal for ArcEdge deployments in resource- and space-constrained environments.

Fig. 2: FlexMCN extends on-premises network fabric to multi-cloud

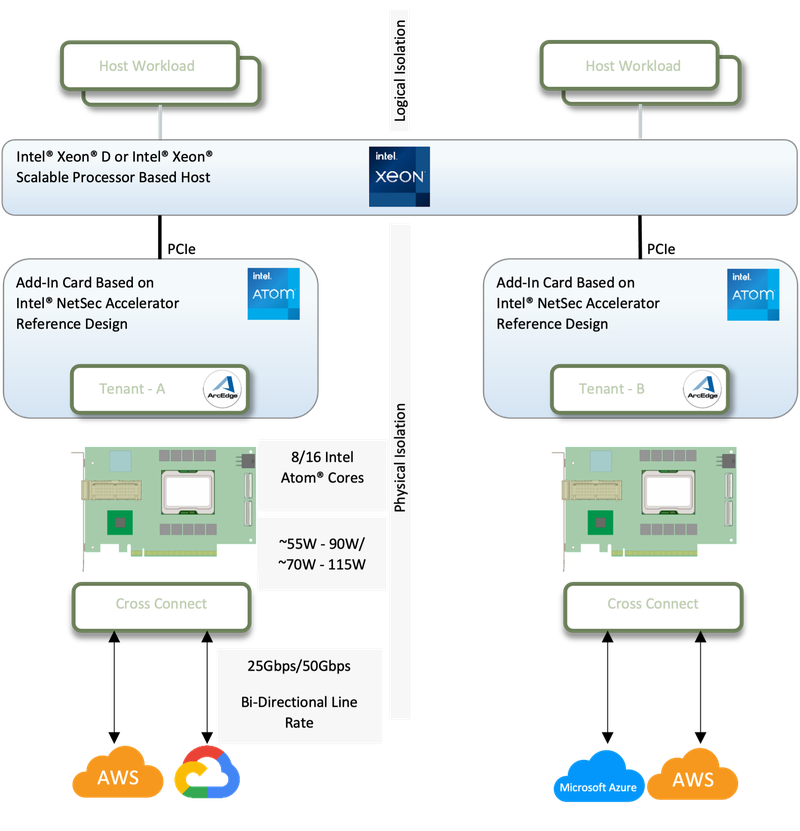

Fig. 3: Hypothetical ArcEdge Colocation Host Architecture on Intel® NetSec Accelerator Reference Design

Intel® NetSec Accelerator Reference Design

An add-in card (AIC) based on Intel® NetSec Accelerator Reference Design can be seen as additional Intel Architecture compute packaged into a PCIe AIC form factor. The AIC based on Intel® NetSec Accelerator Reference Design enables incremental compute, improved connection density, host CPU offload, and physical space conservation. Individual cards are separate physical execution environments, which allows data center operators to configure strong isolation between tenant traffic for enhanced security guarantees[1] and more predictable application performance.

An AIC based on the current version of Intel® NetSec Accelerator Reference Design is built upon an Intel Atom® processor, which delivers high performance for CPU-intensive networking workloads while maintaining a low power consumption profile. Because the card runs at a conservative ~55W – 90W or ~70W – 115W, scaling up compute capacity with the accelerator card may have an energy efficiency advantage over scaling out to additional rackmount servers. For example, a hypothetical 1kW server with a high-efficiency power supply rated at 80% can lose upwards of 200W just to the operational overhead of the power supply, an expense not paid by an AIC. By scaling up existing servers with accelerator cards, data center operators can amortize power supply loss across more compute capacity, helping to increase the overall operational efficiency of the data center.
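The power supply arithmetic above can be sketched in a few lines of Python. This is only an illustration of the 1kW, 80% example from the text: the function names, the 32-core host, and the simplification that the supply loss is treated as a fixed overhead shared by all cores are assumptions made for the sketch, not measured figures.

```python
def psu_loss_watts(input_watts: float, efficiency: float) -> float:
    """Power dissipated by the supply itself, given wall draw and efficiency."""
    if not 0.0 < efficiency <= 1.0:
        raise ValueError("efficiency must be in (0, 1]")
    return input_watts * (1.0 - efficiency)


def loss_per_core(input_watts: float, efficiency: float, cores: int) -> float:
    """Supply loss spread evenly over the cores fed by that supply.

    Assumption: the loss is treated as a fixed overhead, as in the
    article's example; a real supply's loss varies with load.
    """
    if cores <= 0:
        raise ValueError("cores must be positive")
    return psu_loss_watts(input_watts, efficiency) / cores


if __name__ == "__main__":
    HOST_CORES = 32        # hypothetical host core count
    CARD_CORES = 2 * 16    # two 16-core accelerator cards

    loss = psu_loss_watts(1000.0, 0.80)
    before = loss_per_core(1000.0, 0.80, HOST_CORES)
    after = loss_per_core(1000.0, 0.80, HOST_CORES + CARD_CORES)

    print(f"supply loss: {loss:.1f} W")
    print(f"loss per core, host only: {before:.2f} W")
    print(f"loss per core, host plus cards: {after:.2f} W")
```

Under these assumptions, doubling the core count behind one supply halves the loss attributed to each core, which is the amortization effect the paragraph describes.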

The accelerator card comes in both 8- and 16-core SKUs, meaning a typical dual-card deployment can scale the compute of an existing host by up to 32 cores. The instruction set architecture consistency between the host CPU and the AIC provides infrastructure workloads, such as the ArcEdge virtual router, with an easy migration path to the accelerator that requires minimal developer effort or none at all. Migrated applications are then physically isolated from the execution environment of the host and other AICs, allowing data center providers to configure per-tenant isolation for performance-sensitive infrastructure workloads on a multi-tenant host server.

Arrcus ArcEdge on Intel® NetSec Accelerator Reference Design

Fig. 3: ArcEdge + Intel NetSec Accelerator Reference Design Cost Savings Dimensions

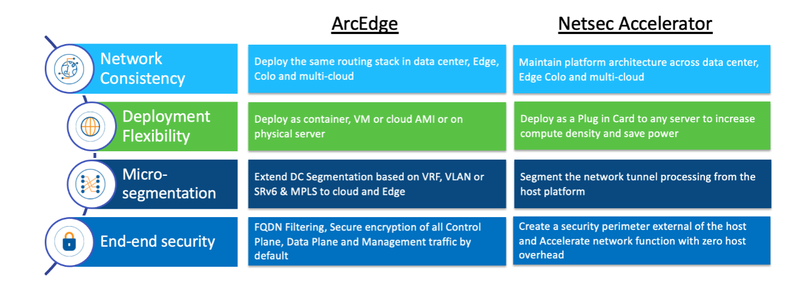

When ArcEdge is deployed to an AIC based on Intel® NetSec Accelerator Reference Design, the two complement each other across several cost-saving dimensions:

Network Infrastructure Consistency: ArcEdge allows operators to homogenize their routing stack across the on-premises data center, edge, colocation, and multi-cloud. Likewise, an AIC based on Intel® NetSec Accelerator Reference Design can homogenize compute architecture across these cross-connected environments as well as between application hosts and dedicated networking compute infrastructure. As a result of this consistency, cost savings are realized through simplified configuration and management. [2]

Deployment Flexibility: ArcEdge can be deployed as a container, cloud virtual machine image, or to bare metal. An AIC based on Intel® NetSec Accelerator Reference Design improves this versatility by giving ArcEdge yet another high-performance deployment vector, the add-in accelerator card.

End-to-End Security: ArcEdge offers FQDN filtering, various tunneling choices (e.g., GRE, L2TPv3, MPLSoGRE, SRv6), and industrial-grade encryption of all control and data plane traffic. Running ArcEdge on an AIC based on Intel® NetSec Accelerator Reference Design allows operators to build a security perimeter of ArcEdges that run on the same host servers as tenant applications while maintaining physical separation[3].

Improved Versatility with Multi-Cloud Networking Automation Toolkit (MCNAT) and ArcOrchestrator

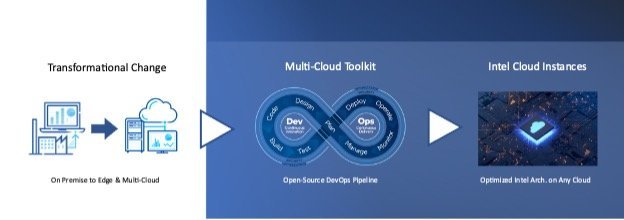

Large-scale implementations can extend to thousands of instances of ArcEdge deployed across multiple clouds, private data centers, and the enterprise network edge. These environments can differ fundamentally in areas such as virtualization technology and networking and compute capability. Intel has designed the Multi-Cloud Networking Automation Toolkit (MCNAT) to allow engineers and operators to quickly assess the performance of their networking workloads in varying environments while automatically applying Intel architecture best-known configurations. MCNAT was instrumental in benchmarking and validating ArcEdge on Intel® NetSec Accelerator Reference Design based PCIe cards and on Intel architecture-based cloud compute instances. Please reach out to Intel to learn more about MCNAT.

Fig. 4: MCNAT Enablement of Intel Architecture-based Multi-Cloud Network Optimizations

ArcOrchestrator is the management component of FlexMCN, which simplifies deployment and configuration. ArcOrchestrator enables the rapid deployment of ArcEdge to any environment, from the cloud to the edge, and the management of security policies including Role-Based Access Control (RBAC) and Access Control Lists (ACLs).

Conclusion

ArcEdge virtual routers running on AICs based on Intel® NetSec Accelerator Reference Design present significant cost optimization opportunities for data center operators. The modular design allows for the rapid expansion of network connectivity without adding servers, thus saving on capital acquisition, space, power, and the cooling costs associated with traditional servers. In addition, the AIC based on Intel® NetSec Accelerator Reference Design provides tenant isolation, adding a further layer of security. AICs based on Intel® NetSec Accelerator Reference Design can scale beyond 10 Gbps of throughput, achieving up to 50 Gbps or up to 100 Gbps line rate per card. By teaming multiple cards, aggregate throughput can be scaled further, limited by the number of PCIe slots available on the server.
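As a rough illustration of the teaming claim, the ideal aggregate line rate is the per-card rate multiplied by the number of cards that actually fit in the server. The sketch below is a back-of-the-envelope upper bound only: the function name and the example slot counts are hypothetical, and it ignores host PCIe bandwidth, traffic mix, and any other real-world limit.

```python
def aggregate_line_rate_gbps(cards: int, per_card_gbps: float, pcie_slots: int) -> float:
    """Ideal upper bound on teamed throughput.

    Only as many cards as there are free PCIe slots can be installed,
    so the usable card count is capped by pcie_slots.
    """
    if cards < 0 or pcie_slots < 0 or per_card_gbps < 0:
        raise ValueError("arguments must be non-negative")
    usable_cards = min(cards, pcie_slots)
    return usable_cards * per_card_gbps


if __name__ == "__main__":
    # Hypothetical server with four free slots.
    for cards, rate in [(2, 100.0), (4, 50.0), (6, 50.0)]:
        total = aggregate_line_rate_gbps(cards, rate, pcie_slots=4)
        print(f"{cards} cards at {rate:.0f} Gbps each -> {total:.0f} Gbps ideal aggregate")
```

The third case shows the slot limit at work: six cards are requested, but only four can be installed, so the bound is the same as for four cards.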

[1] No product or component can be absolutely secure.

[2] Your costs and results may vary.
